Lasso Regression

Lasso regression is a form of linear regression that performs both regularization and feature selection. LASSO stands for Least Absolute Shrinkage and Selection Operator. The method adds a penalty proportional to the sum of the absolute values of the coefficients. This penalty shrinks the coefficients toward zero and sets some of them exactly to zero, so the final model uses only a subset of the predictors. Lasso is also applied when predictor variables are highly correlated with one another (multicollinearity). Used well, lasso regression can improve both the predictive accuracy and the interpretability of a model.
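For reference, one common way of writing the lasso objective is shown below. It uses n observations, p predictors $x_{ij}$, a response $y_i$, an intercept $\beta_0$, coefficients $\beta_j$, and a tuning parameter $\lambda \ge 0$ that sets the strength of the penalty. The $1/(2n)$ scaling of the squared-error term is a convention; scikit-learn's `Lasso`, for example, uses it. Other texts drop the scaling, which only rescales $\lambda$.

```latex
(\hat{\beta}_0, \hat{\beta}) = \arg\min_{\beta_0,\, \beta}\;
  \frac{1}{2n} \sum_{i=1}^{n} \Big( y_i - \beta_0 - \sum_{j=1}^{p} x_{ij}\beta_j \Big)^2
  + \lambda \sum_{j=1}^{p} \lvert \beta_j \rvert
```

Setting $\lambda = 0$ recovers ordinary least squares. As $\lambda$ grows, more coefficients are driven exactly to zero. The intercept $\beta_0$ is conventionally left unpenalized.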

Kitchen Sink

In regression analysis, a "kitchen sink" model is one that includes every available predictor variable, regardless of its significance or relevance. The name comes from the idiom "everything but the kitchen sink." Although this can look like a thorough approach, it often leads to overfitting and makes the results harder to interpret.

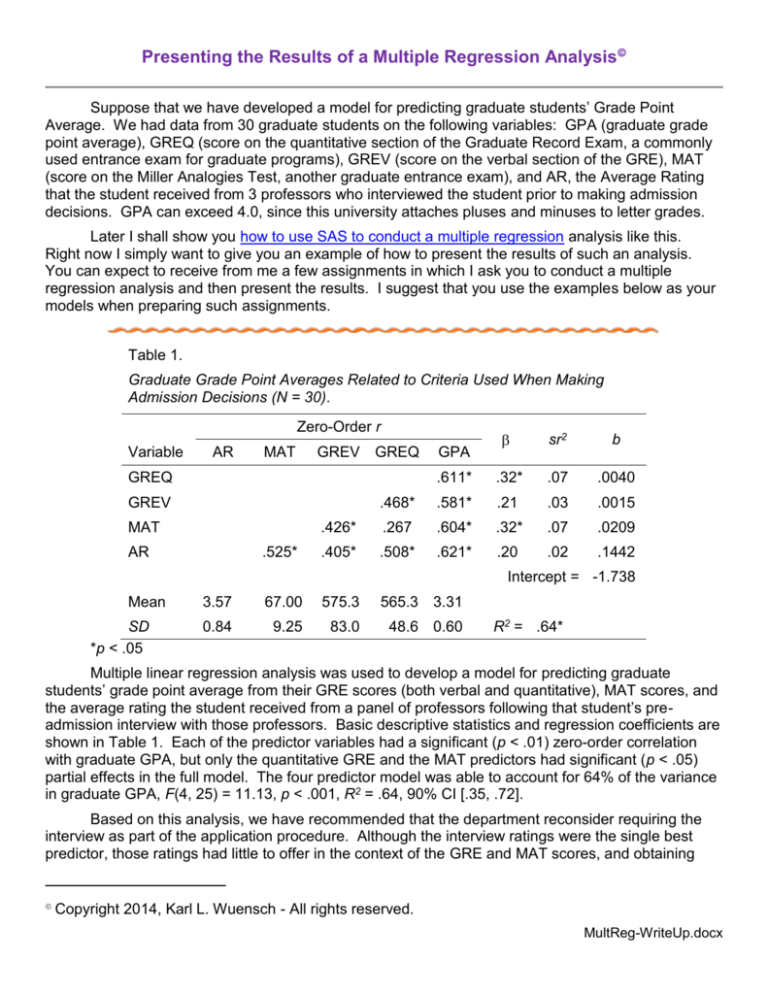

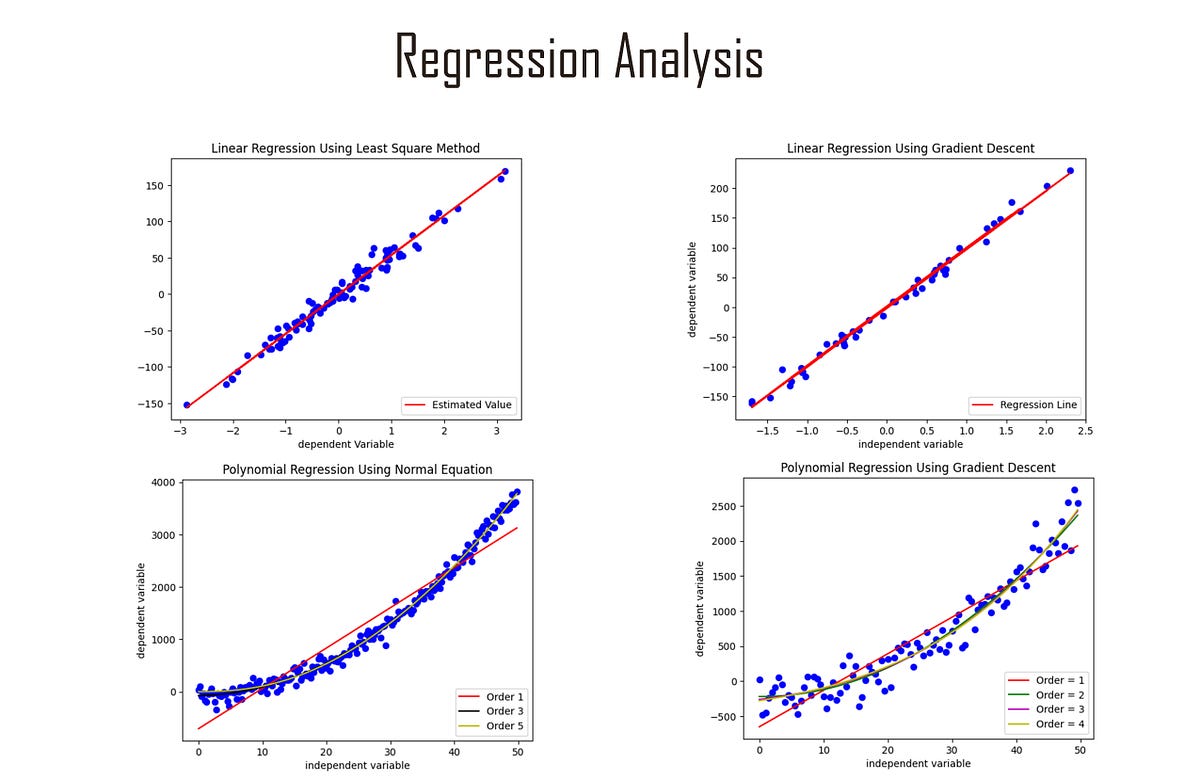

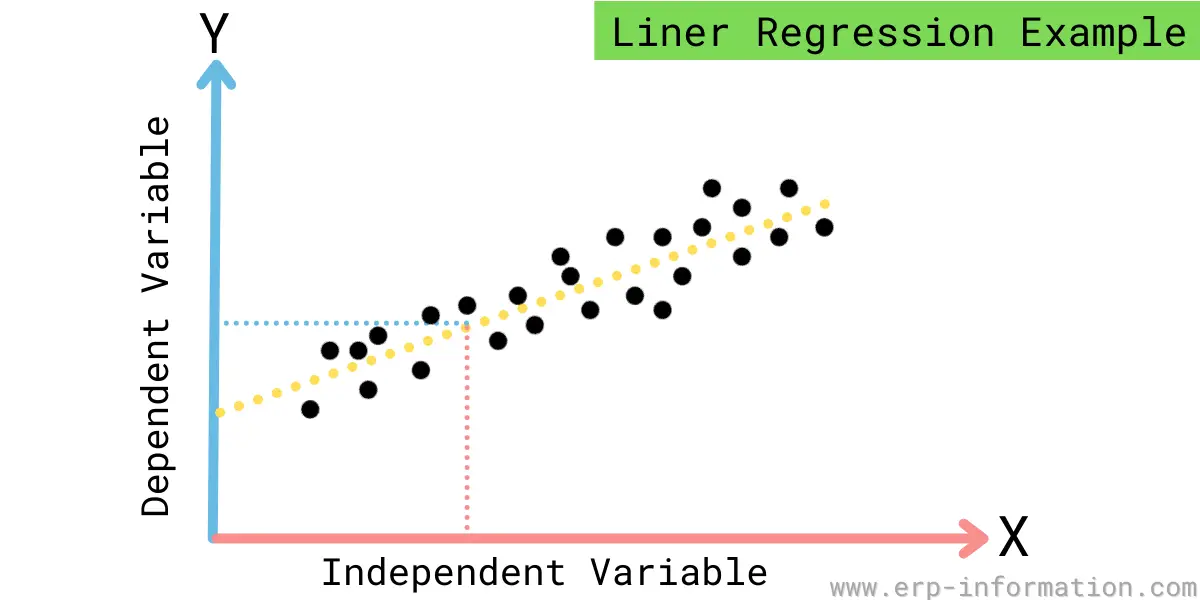

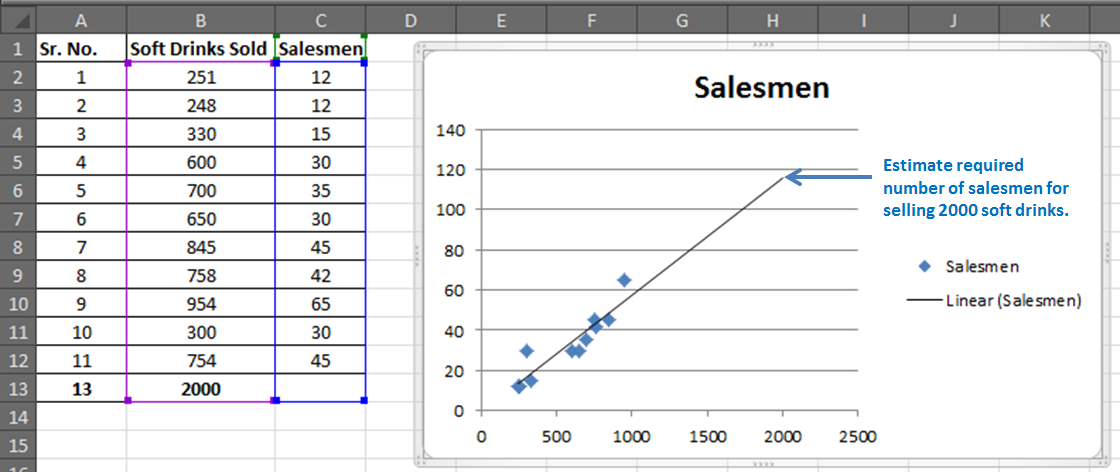

Regression Analysis

Regression analysis is a statistical technique for modeling the relationship between a dependent variable and one or more independent variables. It is widely used in data analysis and predictive modeling to identify patterns and predict future outcomes. There are many types of regression analysis, including lasso regression and kitchen sink regression, and each has its own benefits and drawbacks.
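The sketch below is a minimal illustration of the simplest case, with one predictor and one response. It fits a least-squares line using the closed-form slope and intercept, then uses the fitted line to predict new values. The function names `fit_simple_ols` and `predict` are hypothetical, chosen for this example, and the data are made up.

```python
def fit_simple_ols(x, y):
    """Fit y ~ intercept + slope * x by ordinary least squares (one predictor)."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Slope = covariance(x, y) / variance(x); the 1/(n-1) factors cancel.
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must not be constant")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def predict(intercept, slope, x_new):
    """Apply the fitted line to new predictor values."""
    return [intercept + slope * xi for xi in x_new]


if __name__ == "__main__":
    # Illustrative data lying exactly on y = 1 + 2x.
    x = [1, 2, 3, 4]
    y = [3, 5, 7, 9]
    b0, b1 = fit_simple_ols(x, y)
    print(b0, b1)                   # 1.0 2.0
    print(predict(b0, b1, [5, 6]))  # [11.0, 13.0]
```

This follows the supervised pattern of fitting on data with known outcomes and then predicting on new inputs. Models with many predictors generalize this to a system of equations.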

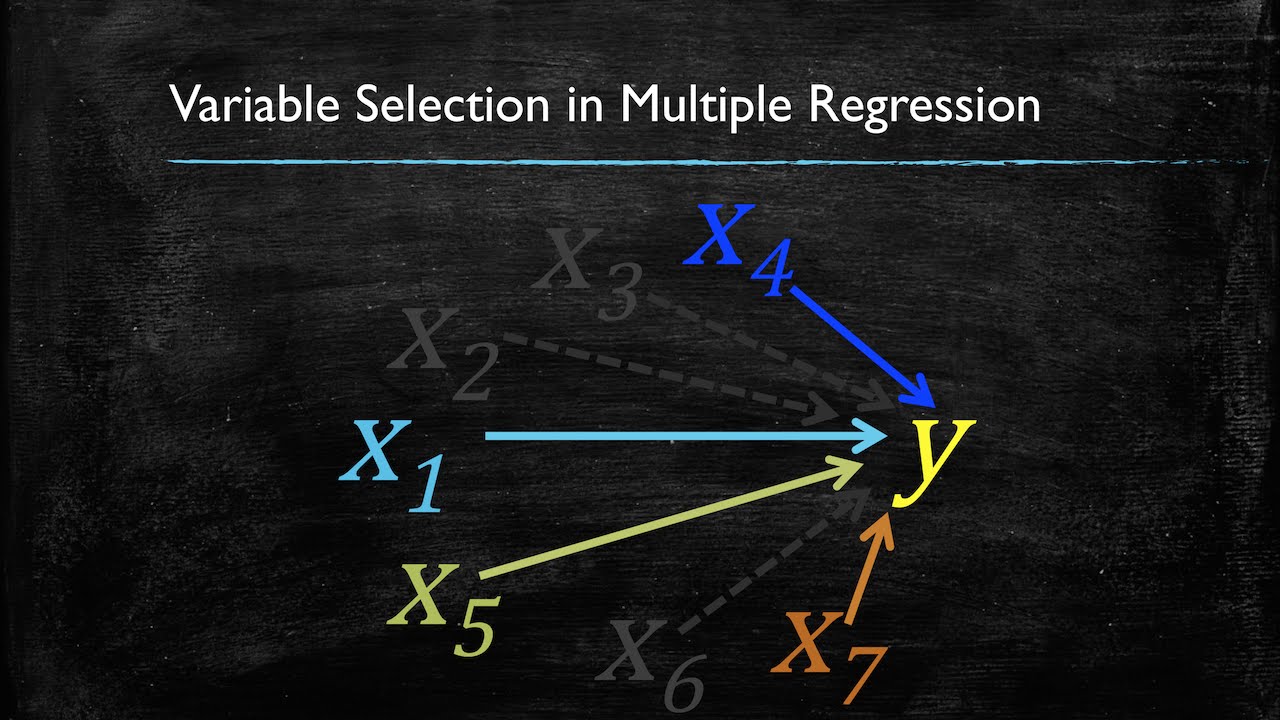

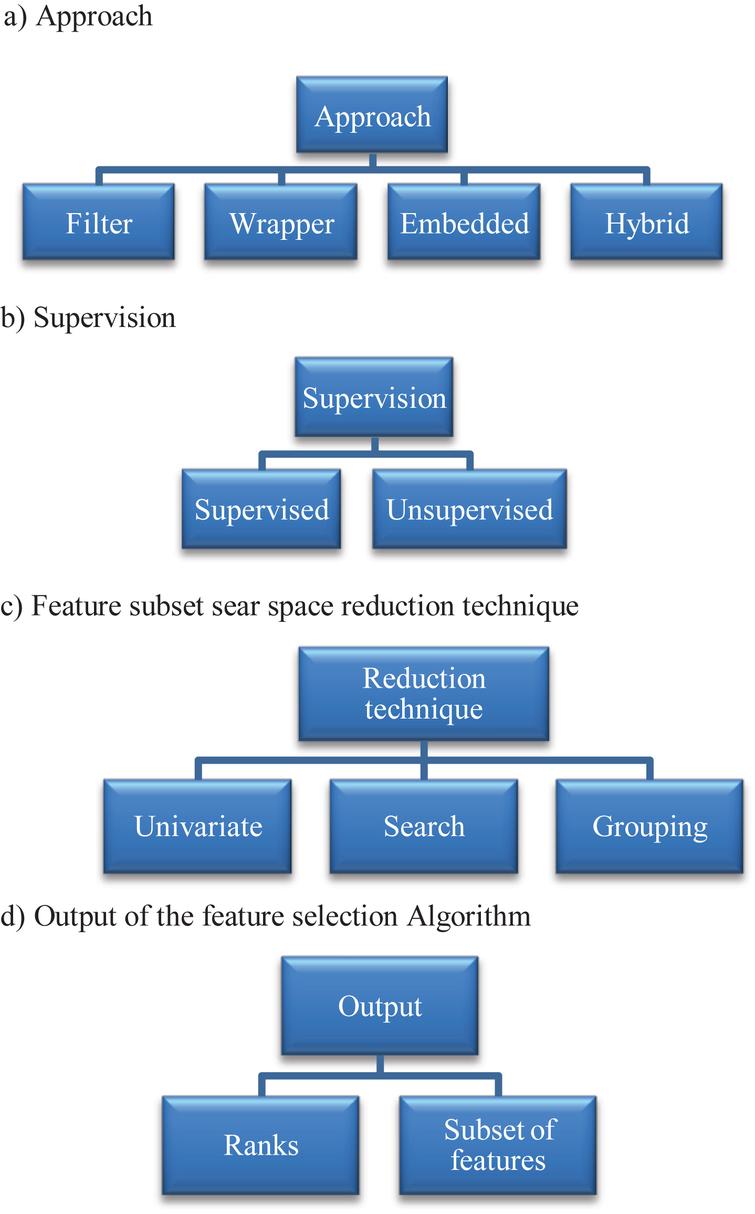

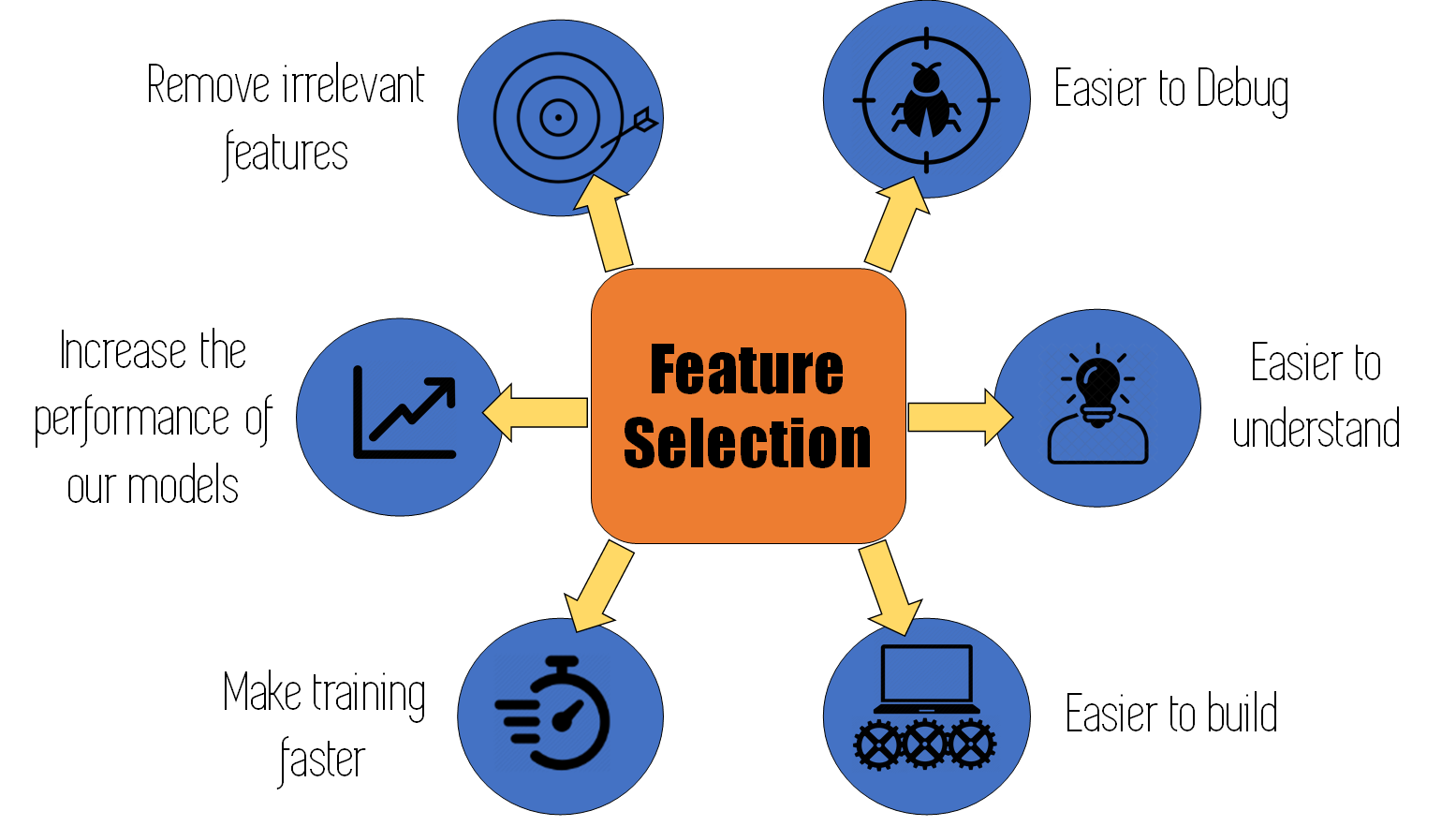

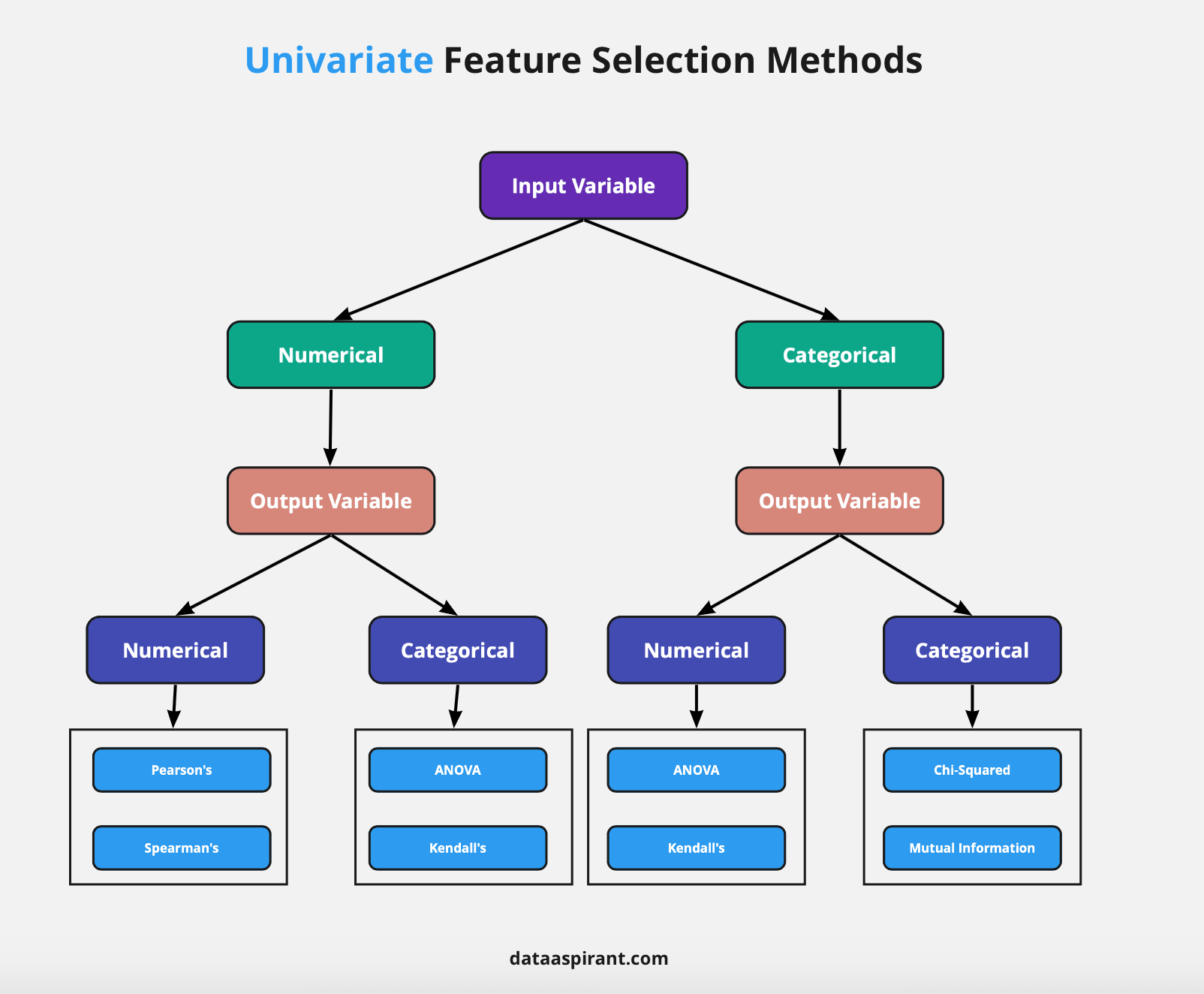

Feature Selection

Feature selection is the process of choosing the most relevant features in a dataset to use in a predictive model. It matters because irrelevant or redundant features can cause overfitting and reduce both the accuracy and the interpretability of the results. Lasso regression is a popular technique for feature selection because it can set the coefficients of irrelevant features to exactly zero, which removes them from the model automatically.
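To make the idea of "automatically removing irrelevant features" concrete, here is a minimal, dependency-free sketch of lasso fitted by cyclic coordinate descent. Coordinate descent is one standard way of solving the lasso problem, but this is not necessarily the algorithm any particular library uses. The sketch minimizes (1/(2n))·‖y − Xβ‖² + λ‖β‖₁ and assumes the predictors and response are already centered, so no intercept is fitted. The names `lasso_cd` and `soft_threshold`, the toy data, and λ = 0.5 are all illustrative choices.

```python
def soft_threshold(z, t):
    """Shrink z toward zero by t; values within [-t, t] become exactly 0."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def lasso_cd(X, y, lam, max_iter=1000, tol=1e-10):
    """Minimize (1/(2n))*||y - X b||^2 + lam*||b||_1 by cyclic coordinate descent.

    X is a list of rows (n x p). Assumes centered columns and centered y (no intercept).
    """
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    residual = list(y)  # y - X @ beta, with beta = 0 initially
    # (1/n) * squared norm of each column
    col_sq = [sum(row[j] ** 2 for row in X) / n for j in range(p)]
    for _ in range(max_iter):
        max_change = 0.0
        for j in range(p):
            if col_sq[j] == 0.0:
                continue  # an all-zero column can never enter the model
            # Correlation of column j with the partial residual that excludes feature j.
            rho = sum(row[j] * (r + row[j] * beta[j]) for row, r in zip(X, residual)) / n
            new_bj = soft_threshold(rho, lam) / col_sq[j]
            delta = new_bj - beta[j]
            if delta != 0.0:
                # Keep the residual in sync with the updated coefficient.
                for i, row in enumerate(X):
                    residual[i] -= row[j] * delta
                beta[j] = new_bj
            max_change = max(max_change, abs(delta))
        if max_change < tol:
            break
    return beta


if __name__ == "__main__":
    # Toy data: y depends on x1 only; x2 is an irrelevant predictor.
    x1 = [-3.5, -2.5, -1.5, -0.5, 0.5, 1.5, 2.5, 3.5]
    x2 = [1, -1, -1, 1, 1, -1, -1, 1]
    noise = [0.3, -0.1, -0.1, 0.1, 0.1, -0.1, -0.1, -0.1]
    X = [[a, b] for a, b in zip(x1, x2)]
    y = [2 * a + e for a, e in zip(x1, noise)]
    print("lambda = 0   :", lasso_cd(X, y, 0.0))  # both coefficients nonzero
    print("lambda = 0.5 :", lasso_cd(X, y, 0.5))  # x2's coefficient is exactly 0.0
```

With λ = 0 the fit is ordinary least squares, and the noise gives the irrelevant predictor a small nonzero coefficient. With λ = 0.5 that coefficient is set exactly to zero, while the relevant one is only shrunk.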

Machine Learning

Machine learning is a subset of artificial intelligence in which algorithms and statistical models let computers learn from data and make predictions or decisions without being explicitly programmed for each task. Regression analysis is widely used in supervised learning, where a model is trained on a dataset with known outcomes and then used to make predictions on new data. Lasso regression is popular in machine learning for its ability to handle large, high-dimensional datasets, including those where the number of predictors is large relative to the number of observations.

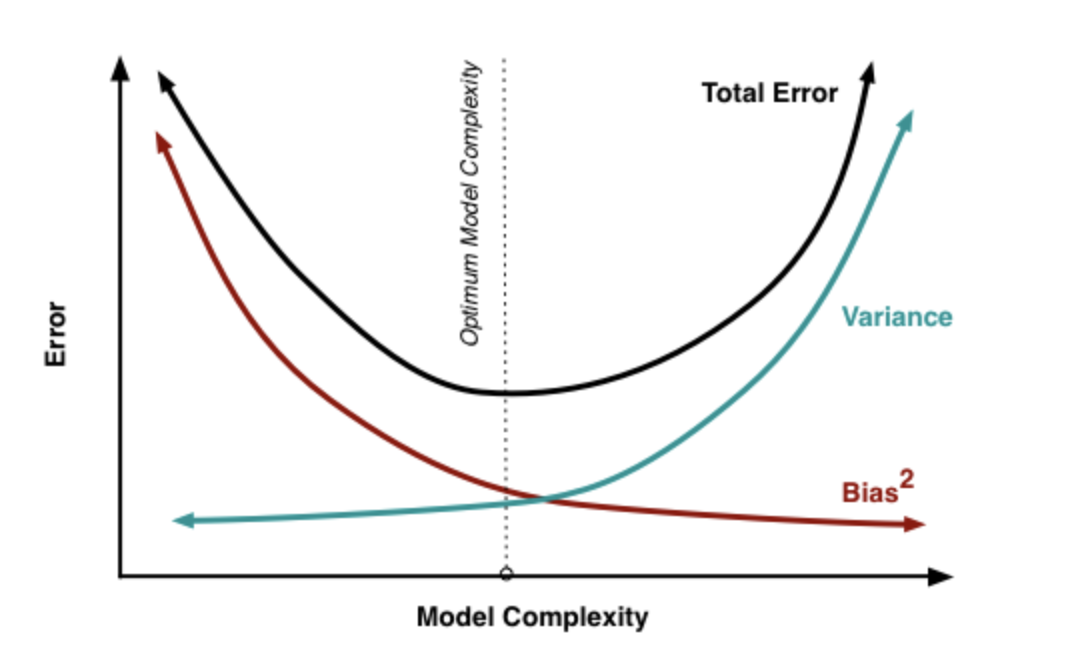

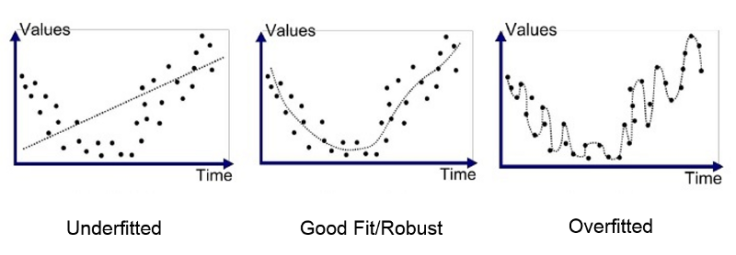

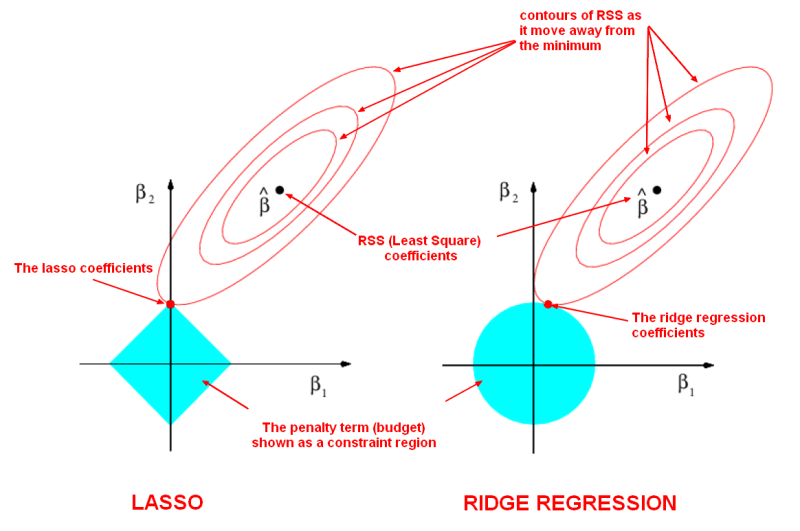

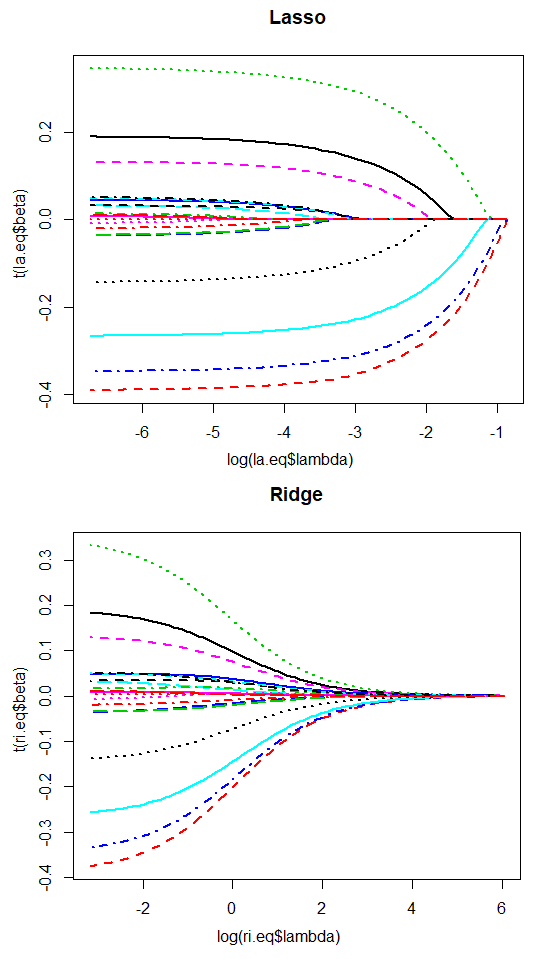

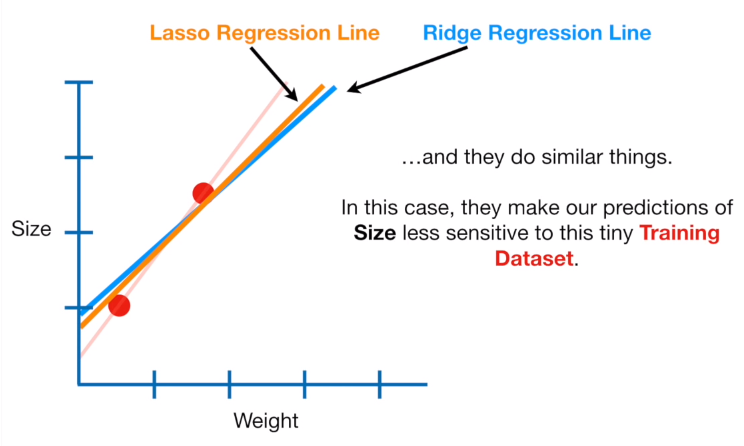

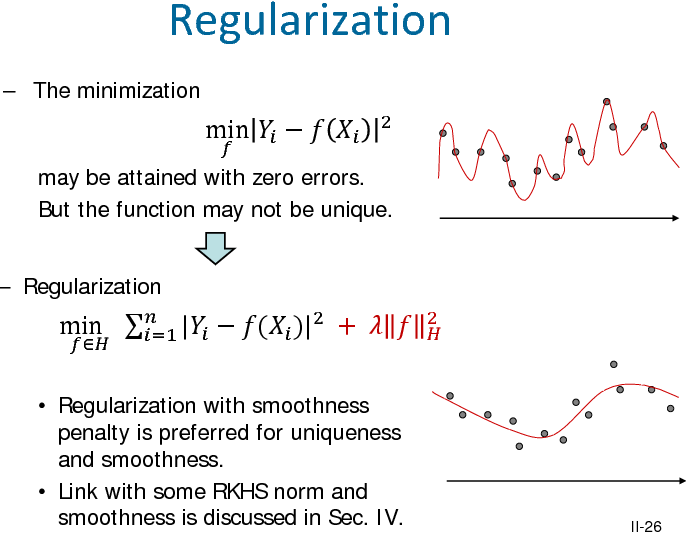

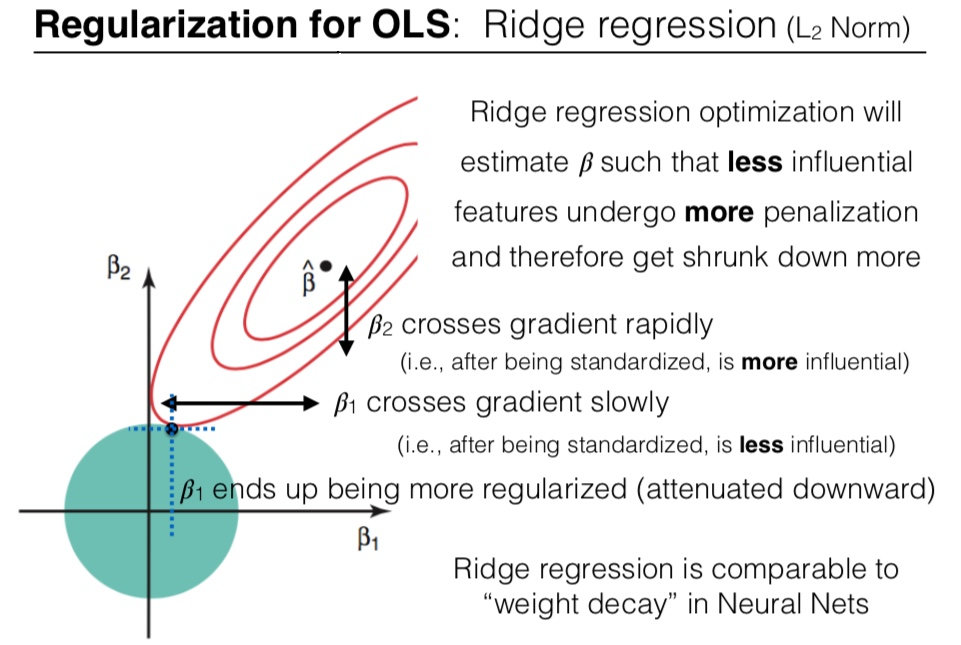

Regularization

Regularization is a technique used in regression analysis to prevent overfitting and improve how well a model generalizes. It adds a penalty term to the model's cost function that grows with the size of the coefficients, which discourages overly complex models. Lasso regression uses L1 regularization, in which the penalty is proportional to the sum of the absolute values of the coefficients. This shrinks all coefficients toward zero and typically sets the coefficients of the least useful features exactly to zero, effectively removing them from the model.
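A special case makes it easy to see how L1 shrinkage differs from simply scaling coefficients down. Assume orthonormal, centered predictors ((1/n)XᵀX = I) and a squared-error term scaled by 1/(2n). Under that assumption, each lasso coefficient is the least-squares estimate z soft-thresholded at λ. For comparison, an L2 (ridge) penalty of (λ/2)‖β‖² only divides z by 1 + λ. The sketch below compares the two, using hypothetical function names.

```python
def lasso_shrink(z, lam):
    """L1 (lasso) estimate of one coefficient under an orthonormal design:
    soft-threshold the least-squares estimate z by lam."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def ridge_shrink(z, lam):
    """L2 (ridge) estimate under the same assumptions, with penalty (lam/2)*||b||^2:
    proportional shrinkage that never reaches exactly zero unless z == 0."""
    return z / (1.0 + lam)


if __name__ == "__main__":
    lam = 1.0
    for z in [3.0, 1.5, 0.8, -0.4]:
        print(f"OLS {z:5.2f} -> lasso {lasso_shrink(z, lam):5.2f}, ridge {ridge_shrink(z, lam):5.2f}")
```

Only the L1 version produces exact zeros, which is why lasso performs feature selection while a pure L2 penalty does not.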

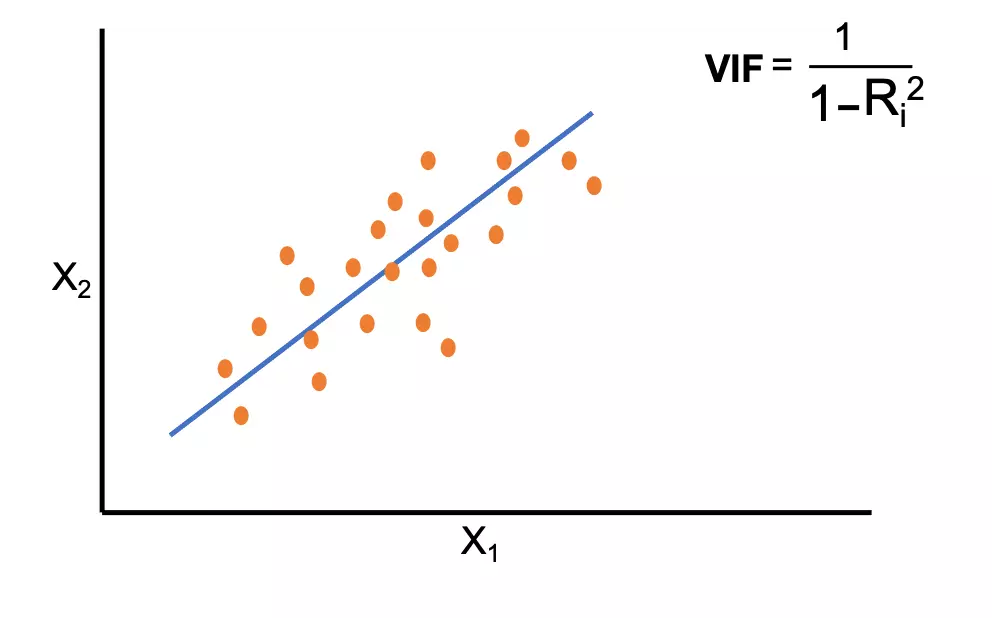

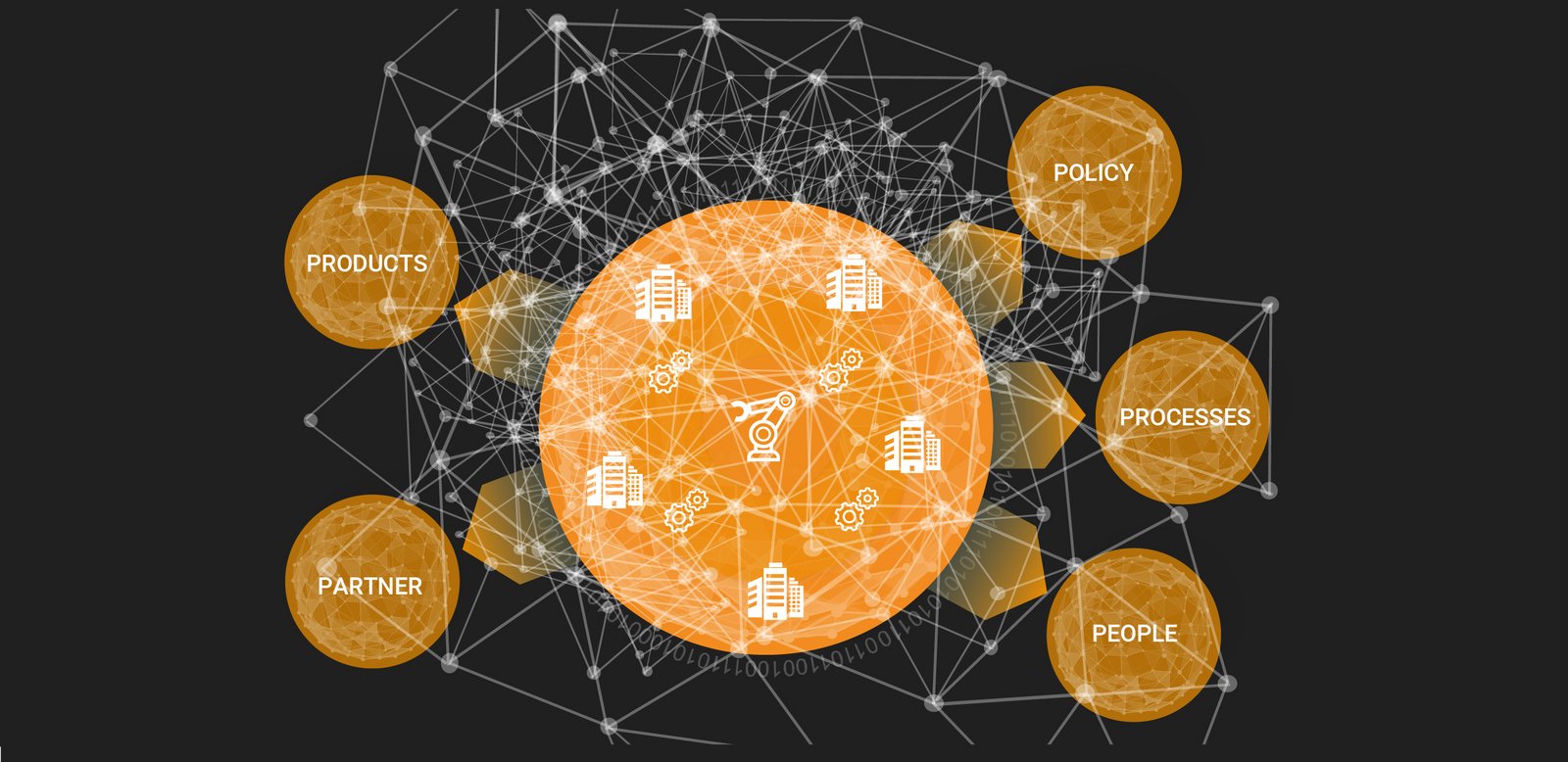

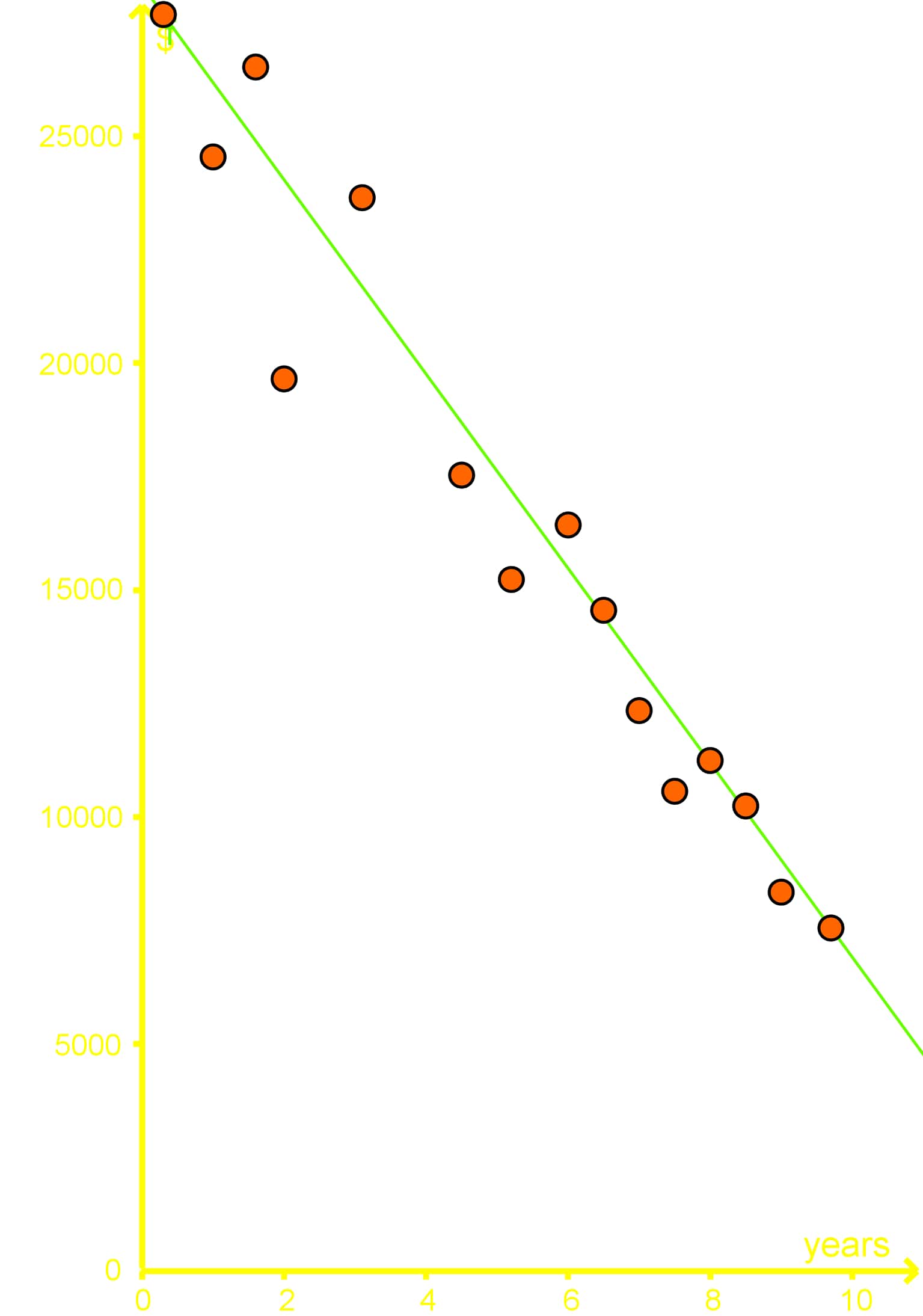

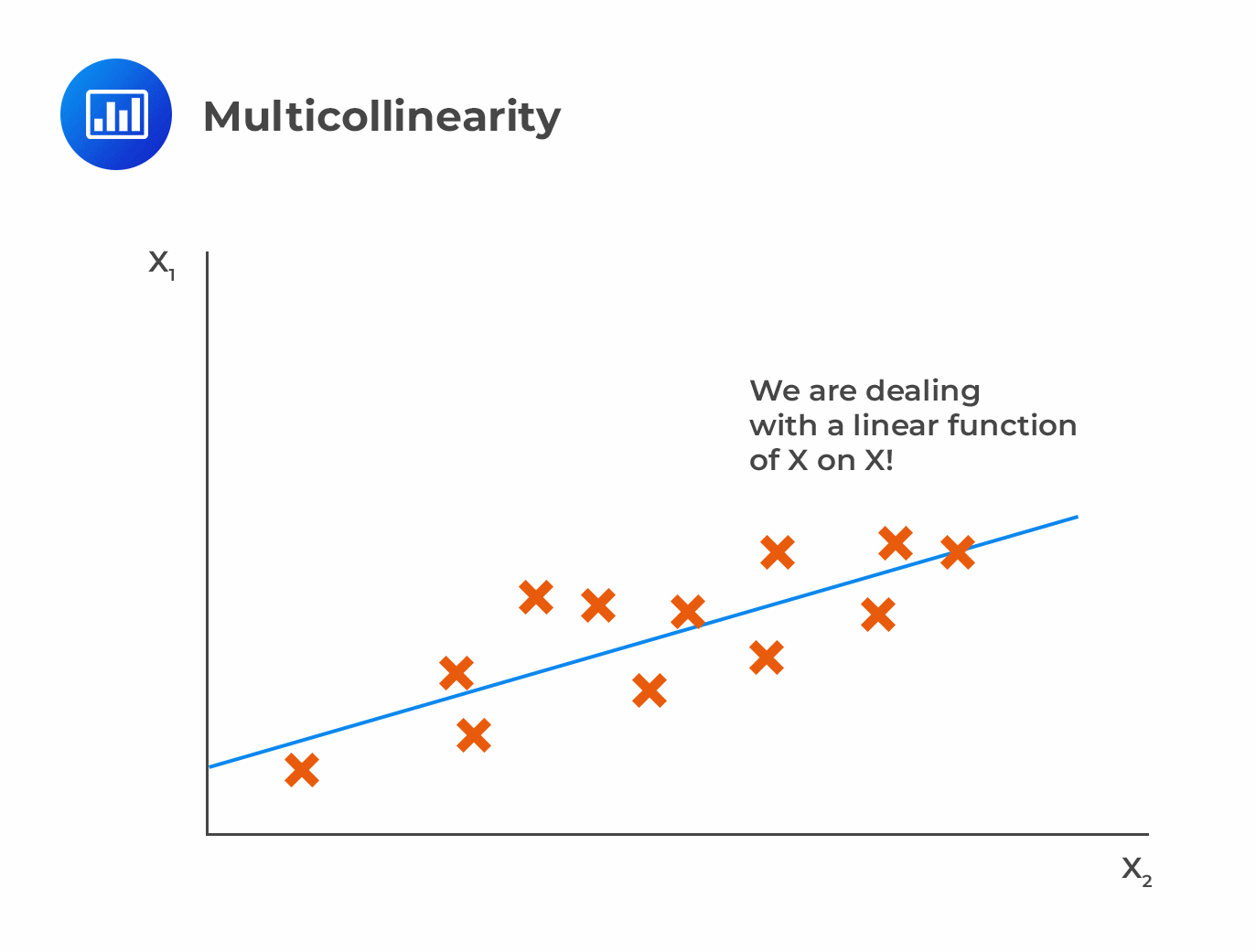

Multicollinearity

Multicollinearity is a common issue in regression analysis in which predictor variables are highly correlated with one another. It can make coefficient estimates unstable and unreliable, and it makes the results difficult to interpret. Lasso regression can help by selecting a subset of the correlated variables and reducing the influence of the rest. However, among a group of strongly correlated predictors, lasso tends to keep one and drop the others, and which one it keeps can be somewhat arbitrary.
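A common first check for multicollinearity is the correlation between predictors. With exactly two predictors, the variance inflation factor (VIF) of each one is 1 / (1 - r^2), where r is their Pearson correlation, so the VIF grows without bound as |r| approaches 1. The helper names and the data below are illustrative. With more than two predictors, computing VIF requires regressing each predictor on all of the others, which this sketch does not do.

```python
import math


def pearson_r(a, b):
    """Sample Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def vif_two_predictors(r):
    """VIF for either predictor in a model with exactly two predictors."""
    if abs(r) >= 1.0:
        return math.inf  # perfect collinearity: OLS coefficients are not identifiable
    return 1.0 / (1.0 - r * r)


if __name__ == "__main__":
    # Hypothetical predictors that move together: floor area and number of rooms.
    size_sqft = [850, 900, 1200, 1500, 1800, 2100]
    rooms = [3, 3, 4, 5, 6, 7]
    r = pearson_r(size_sqft, rooms)
    print(f"r = {r:.3f}, VIF = {vif_two_predictors(r):.1f}")
```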

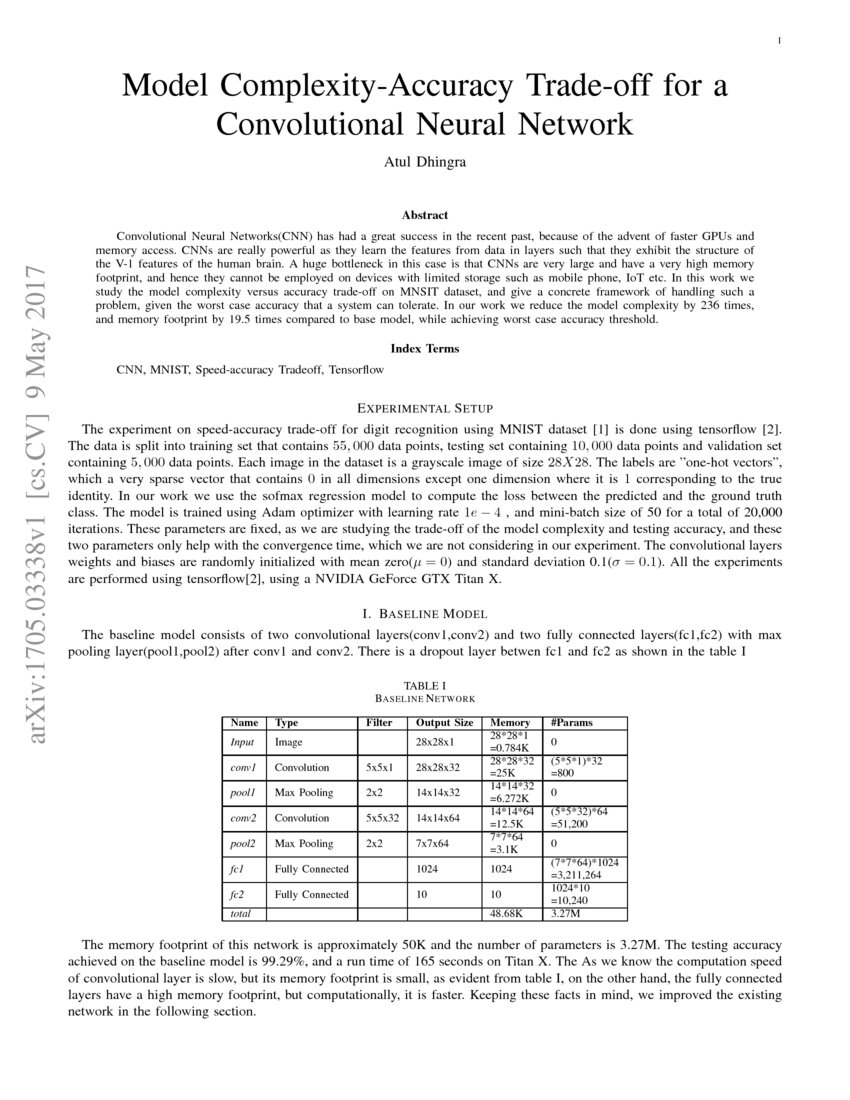

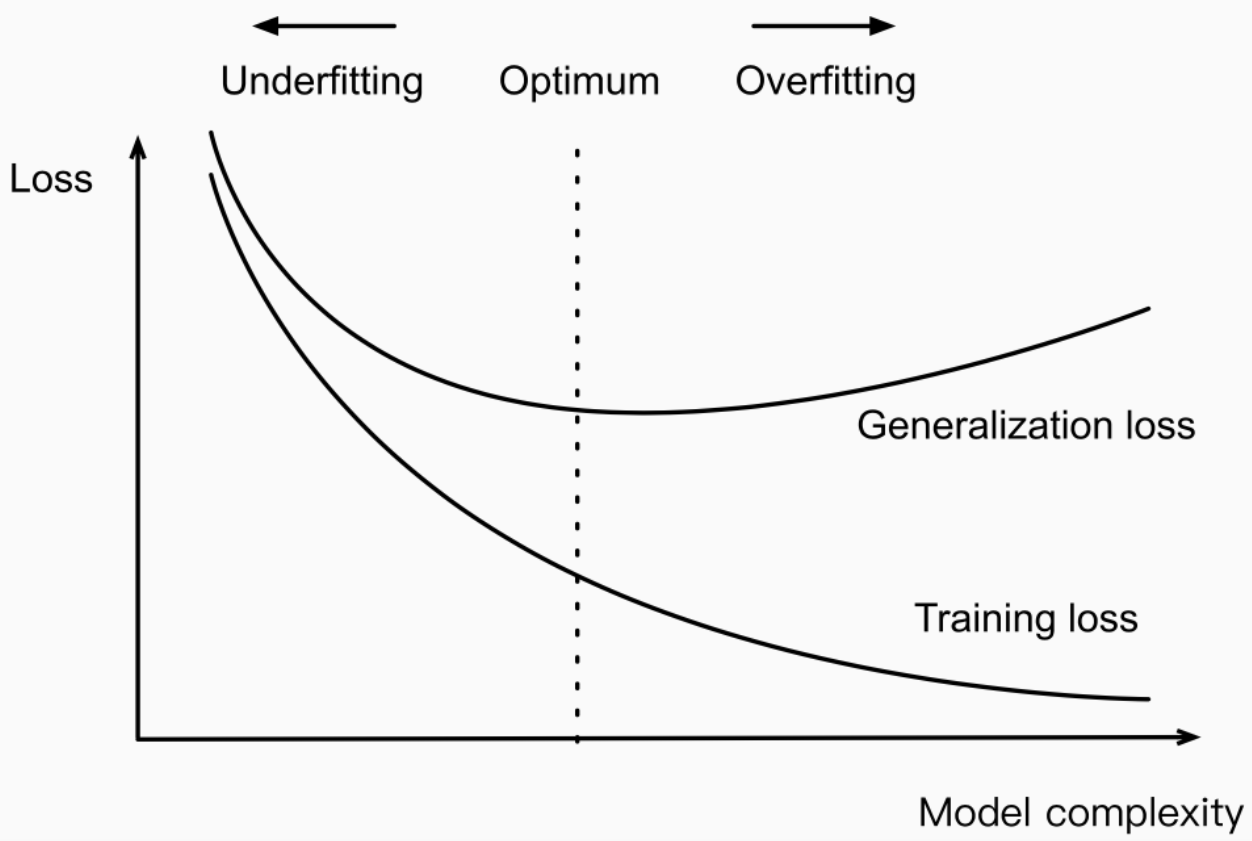

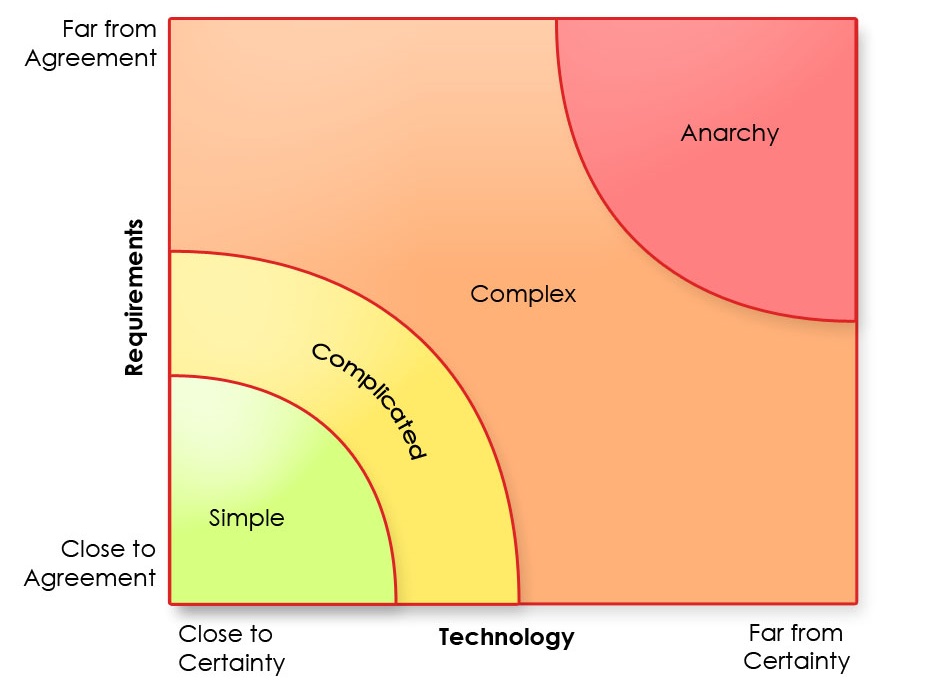

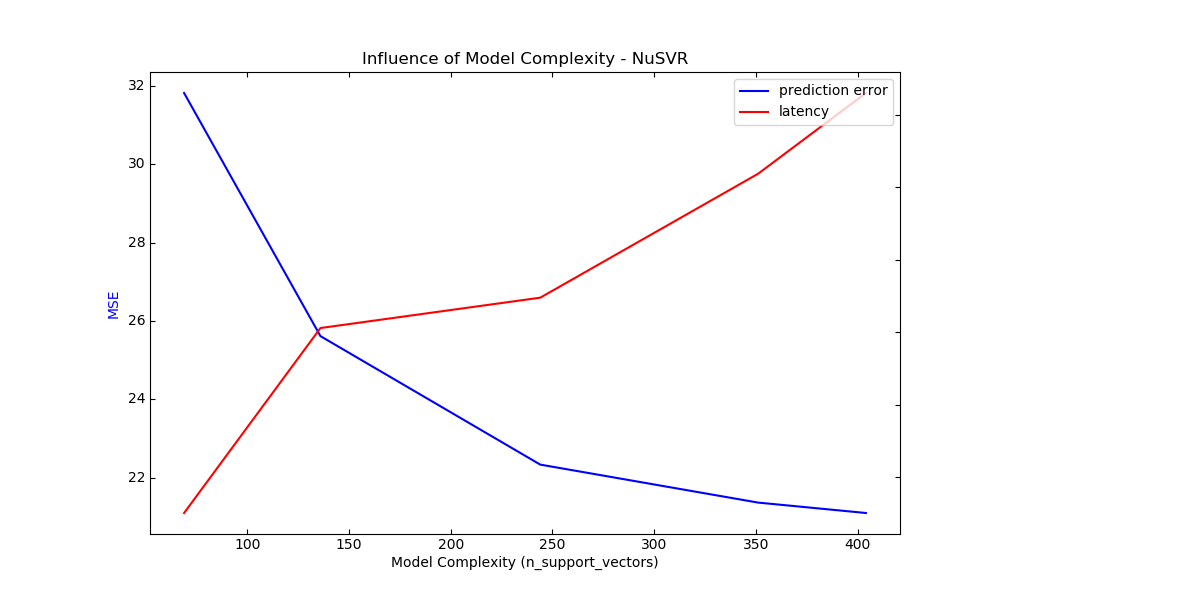

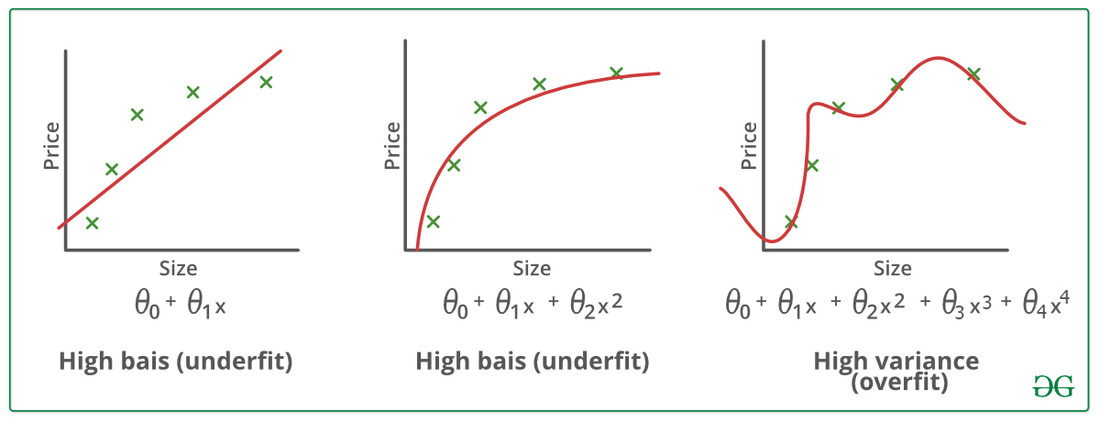

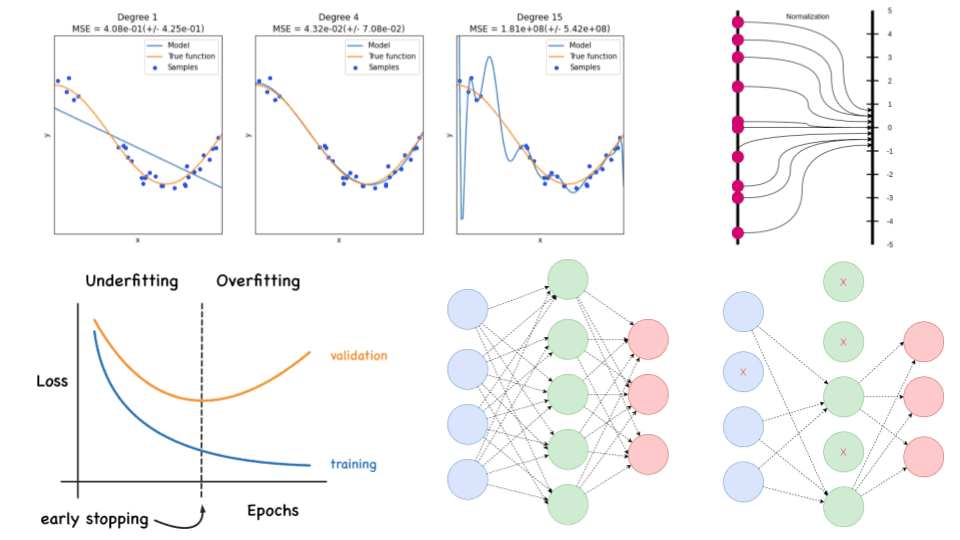

Model Complexity

Model complexity refers to the number of predictor variables in a regression model and the interactions between them. A model with many predictors is prone to overfitting and is difficult to interpret. Lasso regression reduces model complexity by shrinking coefficients and setting the coefficients of irrelevant or redundant variables to zero, so that only the most relevant features remain.
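One way to see how lasso controls model complexity is to count the nonzero coefficients as the penalty λ increases. The sketch below assumes orthonormal, centered predictors and a squared-error term scaled by 1/(2n). In that special case each lasso coefficient is just the least-squares coefficient soft-thresholded at λ, so the count can be traced without a full solver. The least-squares coefficients and the function names are hypothetical.

```python
def soft_threshold(z, lam):
    """Lasso coefficient under an orthonormal design, given least-squares estimate z."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def lasso_path_orthonormal(ols_coefs, lambdas):
    """Return (lambda, coefficients, number of nonzero coefficients) for each lambda."""
    path = []
    for lam in lambdas:
        coefs = [soft_threshold(z, lam) for z in ols_coefs]
        n_nonzero = sum(1 for c in coefs if c != 0.0)
        path.append((lam, coefs, n_nonzero))
    return path


if __name__ == "__main__":
    ols_coefs = [3.0, -1.5, 0.8, 0.2, -0.05]  # hypothetical least-squares estimates
    for lam, coefs, k in lasso_path_orthonormal(ols_coefs, [0.0, 0.1, 0.5, 1.0, 2.0, 4.0]):
        print(f"lambda = {lam:4.1f}: {k} nonzero coefficients")
```

For these made-up values, the number of active predictors falls from 5 at λ = 0 to 0 at λ = 4. In practice λ is usually chosen somewhere along this path, for example by cross-validation.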

Variable Selection

Variable selection, another name for feature selection, is the step in regression analysis of choosing which predictors to include in the model. Because lasso regression drives the coefficients of irrelevant predictors to zero as part of fitting, it performs variable selection automatically, which makes it a popular choice for this task.

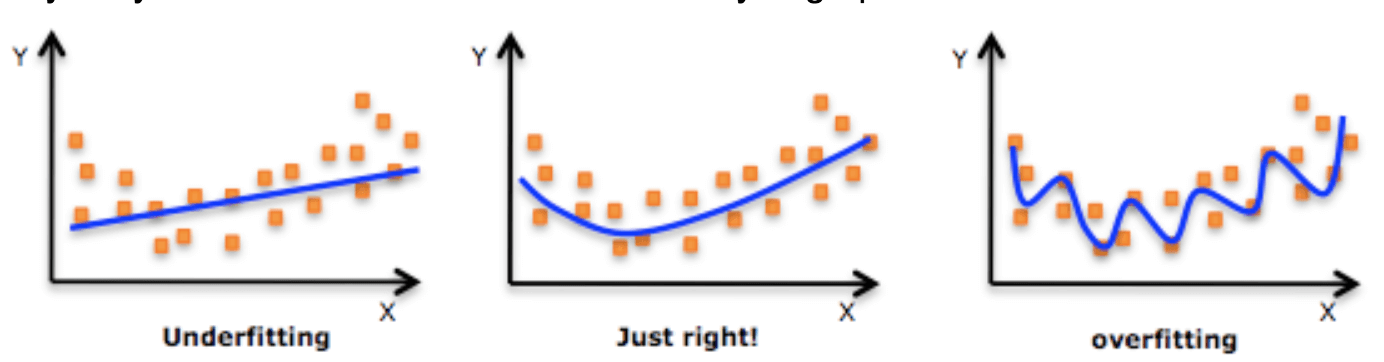

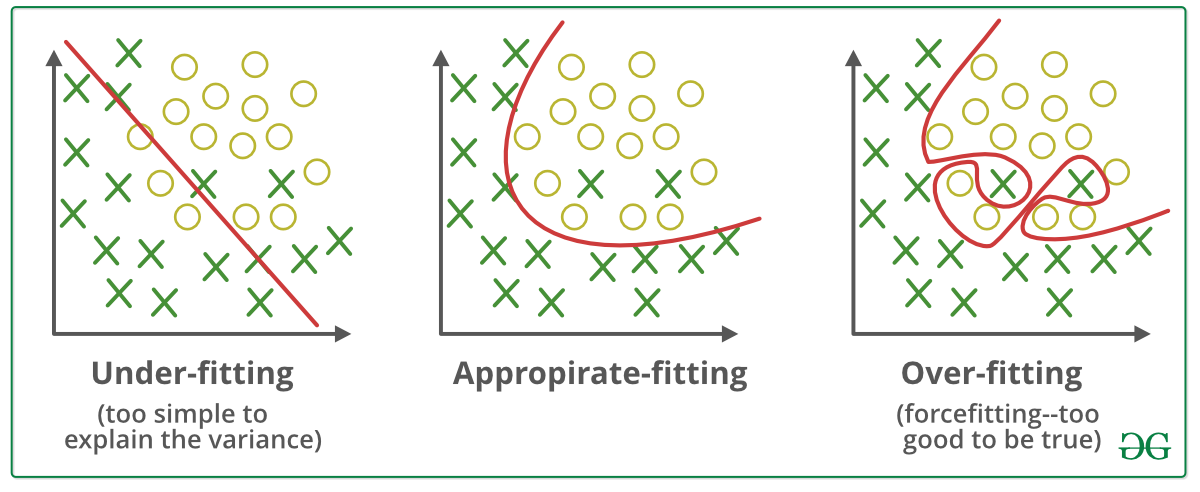

Overfitting

Overfitting is a common issue in machine learning in which a model performs well on its training data but fails to generalize to new data. It occurs when a model is complex enough to capture random noise or idiosyncrasies in the training data rather than the underlying pattern. Lasso regression helps prevent overfitting by reducing the model's complexity and keeping only the most relevant features.
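The sketch below illustrates overfitting with made-up data. Six training points lie on the line y = 2x plus alternating noise of ±0.5. A degree-5 polynomial has as many parameters as there are observations, so it passes through every training point and its training error is zero. Between the points, however, it swings away from the true line. A straight-line least-squares fit has nonzero training error, yet it predicts held-out points on the true line far better. The data, the interpolation approach, and the function names are illustrative assumptions and do not involve lasso itself.

```python
def lagrange_predict(xs, ys, x):
    """Evaluate the unique polynomial of degree len(xs) - 1 through (xs, ys) at x."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total


def fit_line(xs, ys):
    """Least-squares straight line; returns (intercept, slope)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((a - mx) * (b - my) for a, b in zip(xs, ys)) / sum((a - mx) ** 2 for a in xs)
    return my - slope * mx, slope


def mse(pred, actual):
    """Mean squared error between predictions and actual values."""
    return sum((p - a) ** 2 for p, a in zip(pred, actual)) / len(actual)


if __name__ == "__main__":
    train_x = [0, 1, 2, 3, 4, 5]
    noise = [0.5, -0.5, 0.5, -0.5, 0.5, -0.5]
    train_y = [2 * x + e for x, e in zip(train_x, noise)]
    test_x = [0.5, 1.5, 2.5, 3.5, 4.5]
    test_y = [2 * x for x in test_x]  # noise-free true values at held-out points

    b0, b1 = fit_line(train_x, train_y)
    models = {
        "degree-5 interpolant": lambda x: lagrange_predict(train_x, train_y, x),
        "straight line": lambda x: b0 + b1 * x,
    }
    for name, f in models.items():
        train_err = mse([f(x) for x in train_x], train_y)
        test_err = mse([f(x) for x in test_x], test_y)
        print(f"{name:22s} train MSE = {train_err:.4f}, test MSE = {test_err:.4f}")
```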

The Battle of Lasso Regression vs Kitchen Sink in House Design

Introduction to House Design

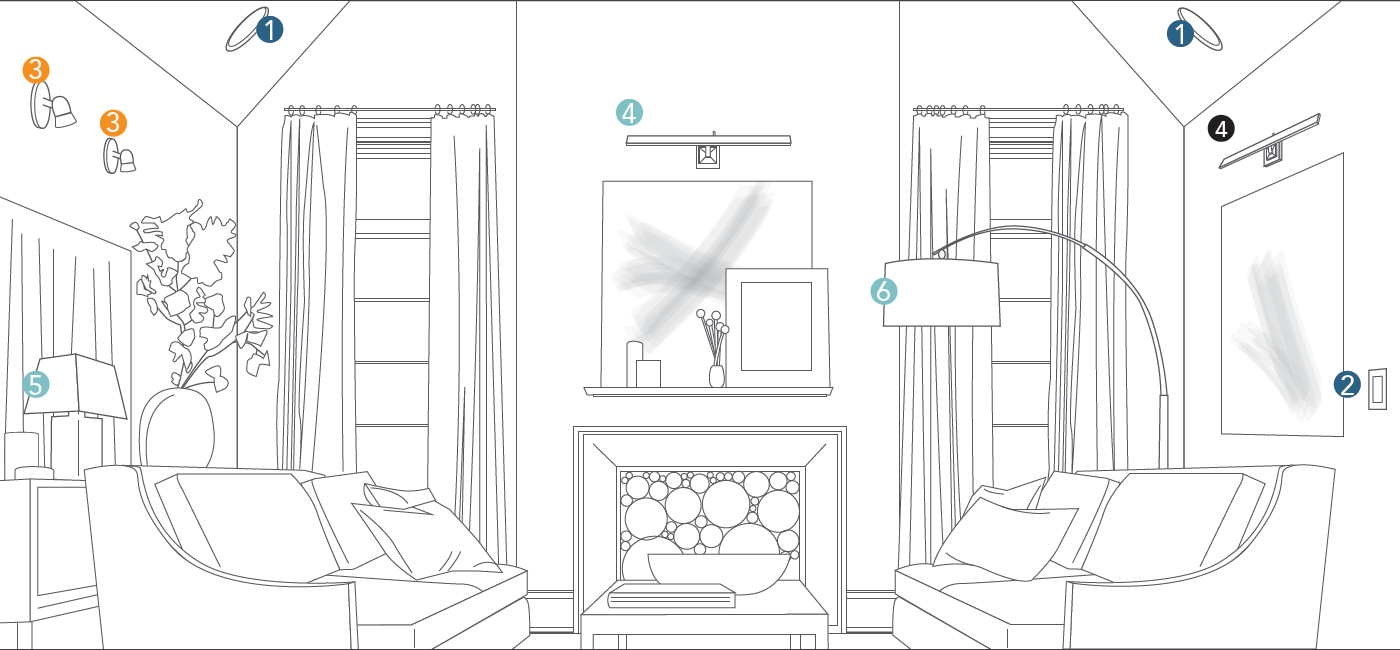

When it comes to designing a house, there are numerous factors to take into consideration. Everything from the layout, color scheme, and furniture placement to the smallest details, like doorknobs and light fixtures, plays a crucial role in creating a functional and aesthetically pleasing living space. With so many options and techniques available, deciding which design approach to take can be overwhelming. In recent years, two methods that have gained popularity in the world of house design are lasso regression and kitchen sink. Let's dive into the world of house design and explore the differences between these two techniques.

The Basics of Lasso Regression

Lasso regression is a statistical method used to analyze and predict the relationships between variables. In house design, this technique is used to identify the most essential and influential features that contribute to the overall design. It works by penalizing the coefficients of variables that do not contribute significantly, resulting in a simpler and more efficient model. This approach is particularly useful when dealing with a large number of variables, because it helps filter out what is unnecessary and focus on the crucial elements.

The All-Inclusive Approach of Kitchen Sink

On the other hand, the kitchen sink approach to house design is all-inclusive. As the name suggests, it involves incorporating every possible feature and design element into the final product. This approach is often seen in eclectic or maximalist designs, where every corner of the house is adorned with different patterns, colors, and textures. Unlike lasso regression, the kitchen sink approach allows for more creativity and personalization in the design process, because no element is considered insignificant.

Which One is Better?

Both lasso regression and the kitchen sink approach have advantages and disadvantages in house design. Lasso regression allows for a more streamlined and efficient design process, while the kitchen sink approach offers more room for creativity and personalization. Ultimately, the best approach depends on the homeowner's preferences and the style of the house. Some may prefer a simpler, more minimalist design, while others may enjoy a more eclectic and bold look. Whichever approach is chosen, what matters most is creating a space that reflects the homeowner's personality and meets their functional needs.

Conclusion

In conclusion, lasso regression and the kitchen sink approach both have their place in house design, and each has unique benefits. Lasso regression helps identify the most crucial design elements, while the kitchen sink approach allows for more creativity and personalization. As with any design process, it is essential to strike a balance between the two and create a space that is both functional and visually appealing. With the right combination of techniques and design elements, a beautiful and comfortable home can be achieved.
